Avoid noise and preserve context

Essentially, it’s about breaking down large text content into manageable parts to optimize the relevance of the content we retrieve from a vector database using LLM.

This reminds me of semantic search. In this context, we index documents filled with topic-specific information.

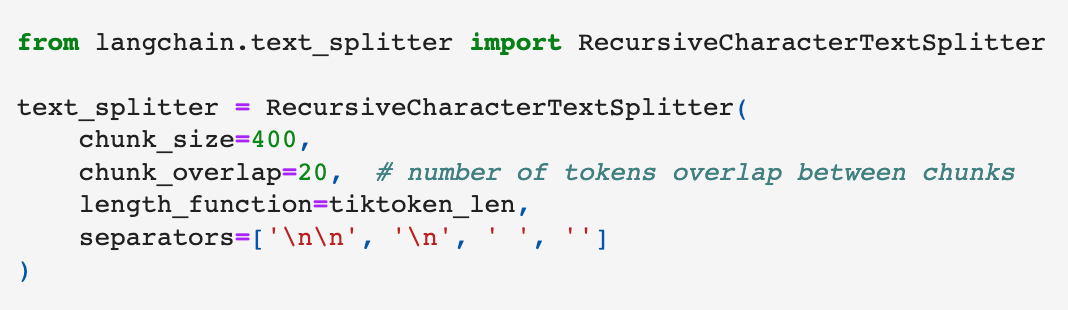

If our chunking is done just right, the search results align nicely with what the user is looking for. But if our chunks are too tiny or too gigantic, we might overlook important content or return less precise results. Hence, it’s crucial to find that sweet spot for chunk size to make sure search results are spot-on.

OpenAIEmbeddings

The OpenAIEmbeddings class is a wrapper around OpenAI’s API for generating language embeddings. It provides a way to use the API’s services, either through the default OpenAI service or via an Azure endpoint.

Here are some highlights and insights about the key components of the OpenAIEmbeddings class, as described in this documentation:

- Initialization: An instance of

OpenAIEmbeddingsis initialized with an OpenAI API key. The key can be passed as a named parameter to the constructor (openai_api_key="my-api-key"), or it can be set as theOPENAI_API_KEYenvironment variable. - Azure support: This class also supports using the OpenAI API through Microsoft Azure endpoints. If you’re using Azure, you need to set several environment variables (

OPENAI_API_TYPE,OPENAI_API_BASE,OPENAI_API_KEY, andOPENAI_API_VERSION). TheOPENAI_API_TYPEshould be ‘azure’ and the rest should correspond to your Azure endpoint’s properties. - Proxy support: The

OPENAI_PROXYenvironment variable is also mentioned, which would be used to set a proxy for your API calls, if needed. - Class methods: The class provides two main methods:

embed_documents(texts: List[str], chunk_size: Optional[int] = 0) → List[List[float]]: This method generates embeddings for a batch of texts. You pass it a list of strings and optionally a chunk size (which sets the number of texts to be embedded per batch). It returns a list of lists of floats, where each inner list is the embedding of a single text.

embed_query(text: str) → List[float]: This method generates an embedding for a single piece of text. You pass it a string and it returns a list of floats as the embedding. - Configurable fields: The class also has three configurable fields:

chunk_size,max_retries, andrequest_timeout. These fields allow you to customize the batch size for embedding generation, the maximum number of retries when generating embeddings, and the timeout for the API request, respectively.

Overall, the OpenAIEmbeddings class appears to be a useful and flexible tool for working with OpenAI’s language embedding API in Python, including advanced features like batching, retries, and support for both OpenAI and Azure endpoints.

Conversational Agents with Knowledge

You see, these agents utilize embedded chunks to form a knowledge-based context, which is crucial for providing accurate responses. However, the size of our chunks is important here. A chunk that’s too large might not fit within the context, especially considering the token limit we have when sending requests to an external model provider like OpenAI. So, we need to tread carefully with chunk sizes here.

Chunking Strategies

We can follow various chunking strategies, but the trick is in understanding your specific use case and choosing the right approach that offers a good trade-off between size and method.

I want to focus on talking about how we embed short and long content.

When we embed a sentence, the resulting vector zeroes in on that specific sentence’s meaning. But when we embed larger chunks like paragraphs or entire documents, the vector considers the broader context, the relationships between the sentences, and the themes within the text. It’s richer but can introduce noise or dilute key points.

Query length is another fascinating aspect. Short queries might match well with sentence-level embeddings, while longer ones looking for broader context might resonate more with paragraph or document-level embeddings.

Several factors can influence our choice of chunking strategy:

We need to consider the content we’re indexing, the embedding model, the expected user queries’ complexity, and how the results will be used in our application. All these factors would help fine-tune our chunking strategy.

There are different chunking methods to explore as well:

- Fixed-size chunking

- Content-aware chunking

- Recursive chunking

- Specialized chunking

While fixed-size chunking is the most common and straightforward approach, other methods like content-aware chunking and specialized chunking can take advantage of the content’s nature and structure, respectively.

Figuring out the best chunk size for your application can be challenging. It requires:

Pre-processing data

- Selecting a range of chunk sizes to test.

- Iteratively evaluating performance until you find the optimal chunk size.

And remember, the «best» chunk size might differ from one application to another.

In the end, chunking is straightforward most of the time, but it can get tricky depending on your specific needs.

There is no one-size-fits-all solution

The best advice I can give is to understand your use case deeply and experiment until you find the right approach.

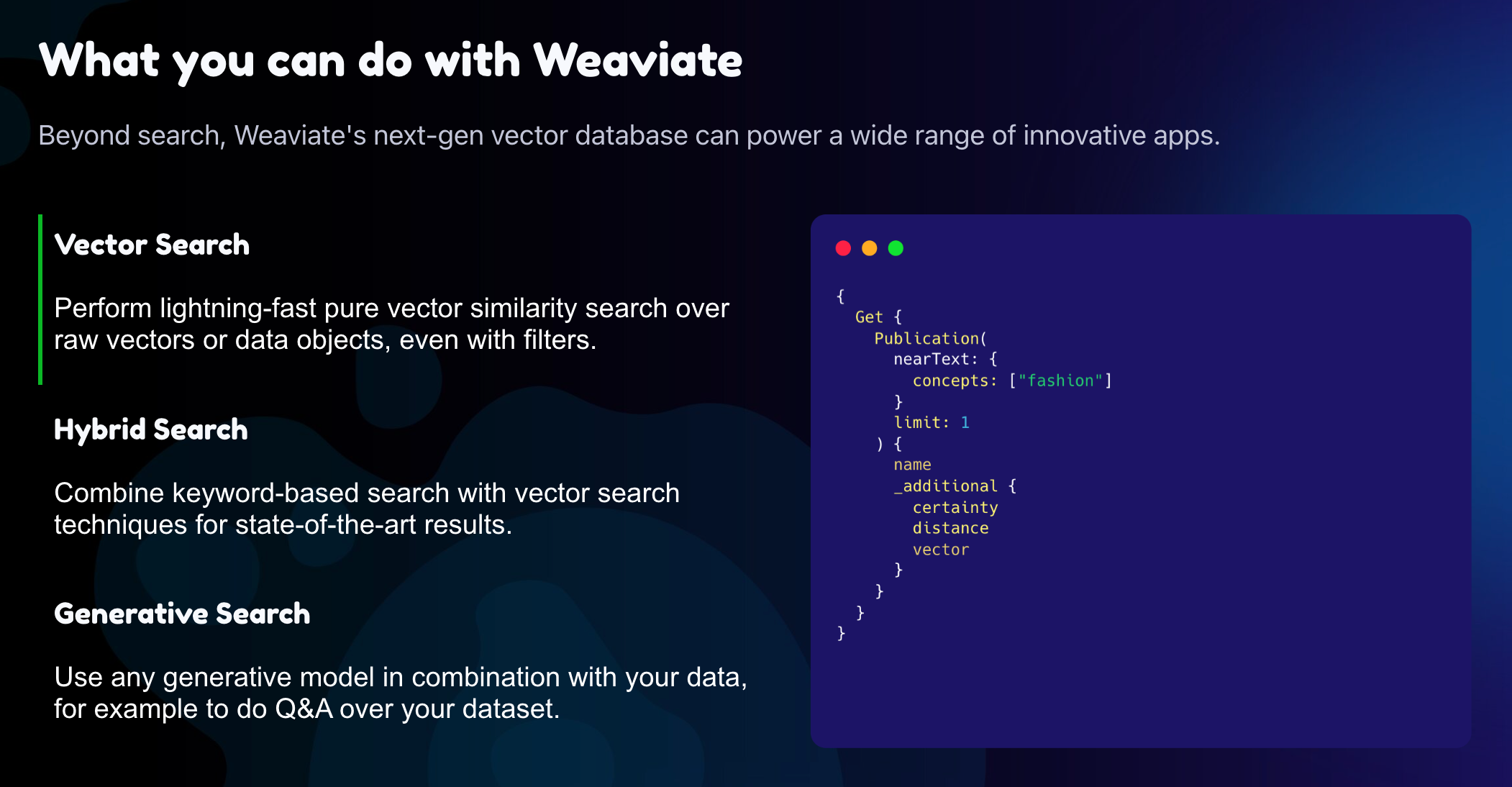

Weaviate’s text2vec-openai Module

An exciting and versatile tool that paires the power of OpenAI’s language models with Weaviate’s data infrastructure.

The use of this module involves a third-party API and may lead to additional costs, so always remember to consult the OpenAI pricing page before scaling up your operations.

What sets the text2vec-openai module apart is its seamless interaction with OpenAI or Azure OpenAI. With this flexibility, you can utilize OpenAI’s cutting-edge models to represent your data objects effectively within Weaviate or Azure.

Weaviate made it easy to work with both service providers, just make sure you follow the appropriate instructions for the provider you choose.

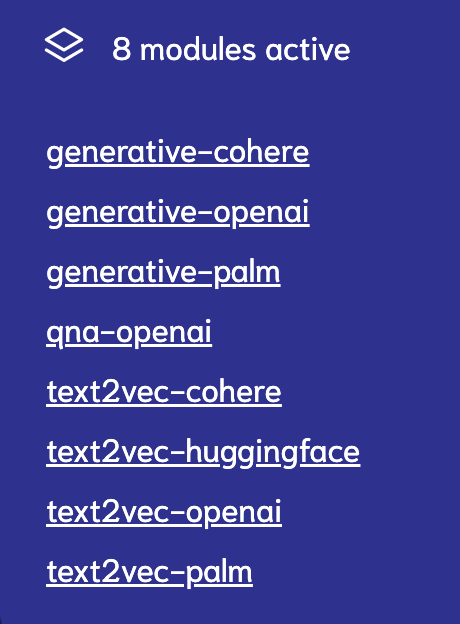

You’ll be glad to know that the module comes ready-to-use and pre-configured on Weaviate Cloud Services.

If you’re using Weaviate open source, you can enable it easily in your configuration file, like docker-compose.yaml, and set it as your default vectorizer module.

Here’s a brief example of how to set it up:

version: '3.4'

services:

weaviate:

image: semitechnologies/weaviate:1.19.6

restart: on-failure:0

ports:

- "8080:8080"

environment:

QUERY_DEFAULTS_LIMIT: 20

AUTHENTICATION_ANONYMOUS_ACCESS_ENABLED: 'true'

PERSISTENCE_DATA_PATH: "./data"

DEFAULT_VECTORIZER_MODULE: text2vec-openai

ENABLE_MODULES: text2vec-openai

OPENAI_APIKEY: sk-foobar # Optional; you can provide the key at runtime.

AZURE_APIKEY: sk-foobar # Optional; you can provide the key at runtime.

CLUSTER_HOSTNAME: 'node1'

...For configuration, you can either embed your OpenAI or Azure API key directly in the environment settings or provide it at runtime.

With our module, you can easily adjust the vectorizer model and behavior through your schema.

Here’s how you might define a document class with OpenAI settings:

{

"classes": [

{

"class": "Document",

"description": "A class called document",

"vectorizer": "text2vec-openai",

"moduleConfig": {

"text2vec-openai": {

"model": "ada",

"modelVersion": "002",

"type": "text"

}

},

}

]

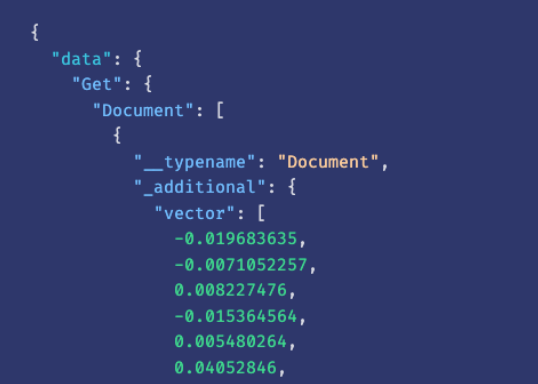

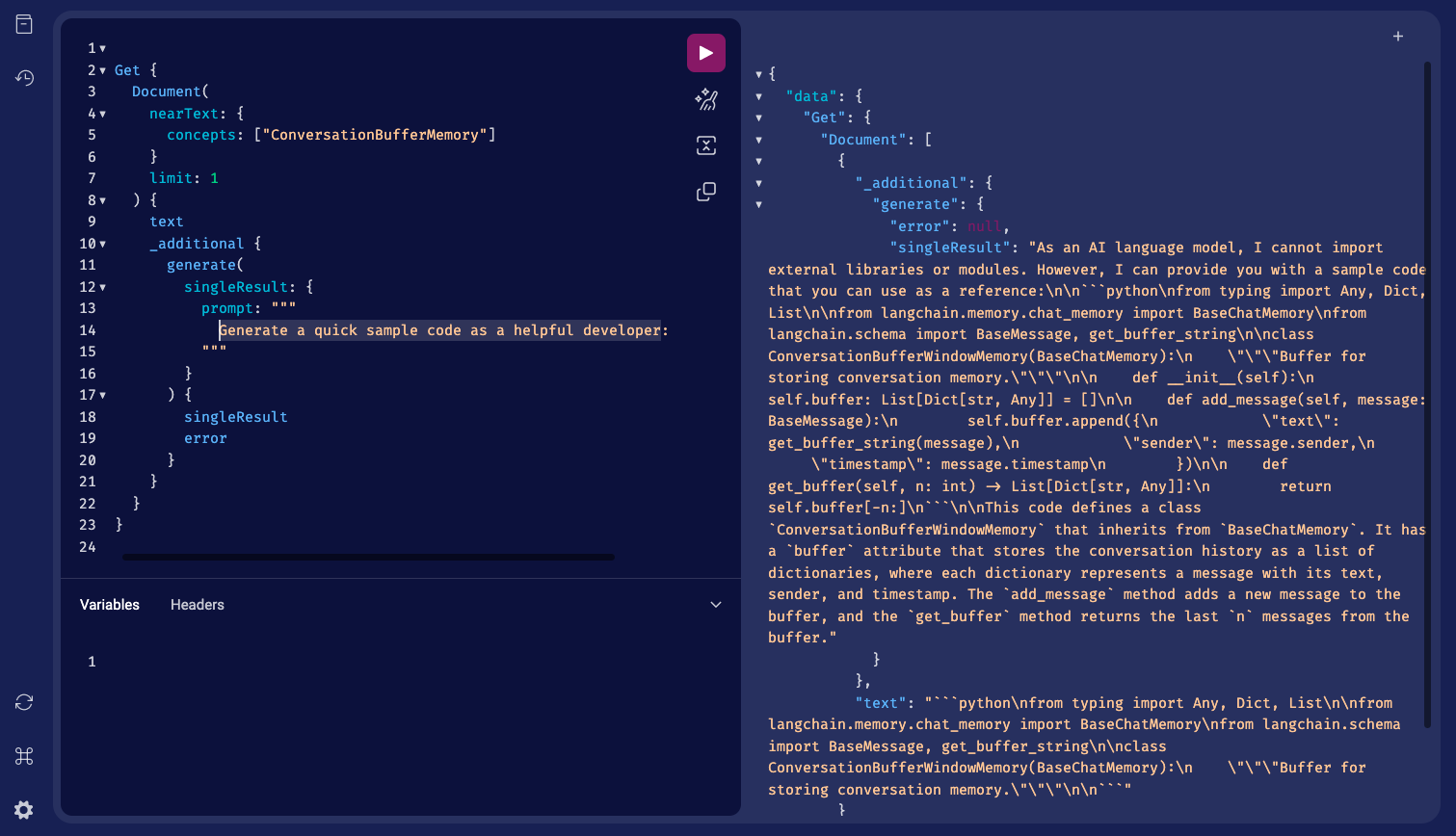

}GraphQL vector search operators

Weavoate’s text2vec-openai module also allows GraphQL vector search operators, providing an API key during the query if it isn’t set in the configuration.

For instance, here’s how to pass the key via the HTTP header:

X-OpenAI-Api-Key: YOUR-OPENAI-API-KEY

X-Azure-Api-Key: YOUR-AZURE-API-KEYRemember that you have the freedom to choose from OpenAI’s various models for document or code embeddings, such as ‘ada‘, ‘babbage‘, ‘curie‘, and ‘davinci‘.

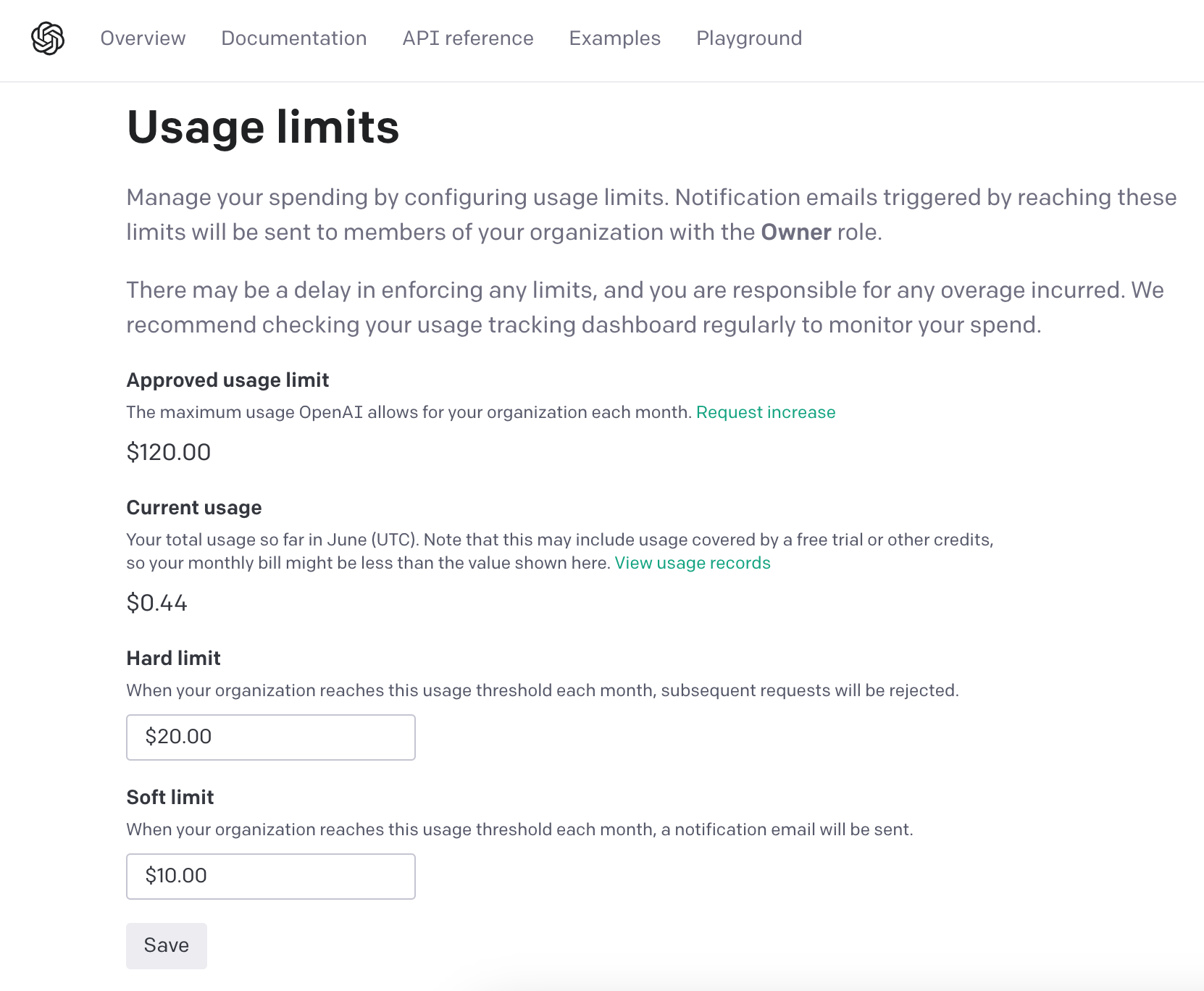

I recommend being mindful of OpenAI’s rate limits when using your API key.

If you reach your limit, you’ll receive an error message from the OpenAI API.

And of course, Weaviate got tools in place to help manage these rate limits, such as the ability to throttle the import within your application, as illustrated in the Python and Java client examples.

So, what are you waiting for? Start using Weaviate’s text2vec-openai module and unlock the world of enhanced data representation with the power of OpenAI.